In July 2017, an epic rebuke made waves on the internet as two tech titans clashed. Yes, we are talking about the now-infamous cyber spat between Elon Musk and Mark Zuckerberg. The bone of contention was AI and its future impact on humanity.

Musk has repeatedly sounded the alarm on AI and the havoc it could cause if not kept on a leash. He pressed these points at the National Governors Association meeting held in Providence, Rhode Island, earlier in July. After this, Zuckerberg, in a Facebook Live chat, dismissed Musk’s claims and called him a naysayer. In fact, he called the Tesla CEO ‘irresponsible’ for such a negative outlook.

Not one to be left behind, Musk posted this response on Twitter:

While the spat will soon vanish from our memories, the spark remains ignited: are Musk’s claims well-founded? Do we need AI regulations?

First things first. What is AI?

To most of us, AI is a robot from the future that can do incredible things, including shape shifting. Thank you, Terminator, for that glamorous image of AI. But seriously, AI is not just a robot. Robots are containers for artificially-intelligent systems working in the background, making high-quality decisions.

According to AI researchers, there are three types of AI:

- Artificial Narrow Intelligence (ANI)

These perform only specific tasks, like the Google AI that beat the world’s top-ranked player at the ancient Chinese game of Go. It can do this task and this task alone. Another example is the self-driving car, which will hit the roads soon (and has already been involved in a death during the trial phase).

Quite recently, the BRICS Development Bank, a financial institution set up jointly by Brazil, Russia, India, China and South Africa, approved its first loan for Russia. It is meant to fund a project that includes the use of AI in Russian courts to automate trial records using speech recognition.

- Artificial General Intelligence (AGI)

Artificial General Intelligence would think on par with humans. Imagine having a sane conversation with a machine. Today’s chatbots might soon achieve that (with copious amounts of training, of course). AGI could be incredibly beneficial for us: building smarter homes, performing complex surgeries, eliminating the loss of human lives in wars, and much more.

- Artificial Super Intelligence (ASI)

Creating something that’s much more intelligent than us? How would you control it? Imagine a soldier so strong that even tanks and missiles can’t harm them, and who is well-versed in all defence secrets. What happens when this commando goes rogue? Now imagine this on a real-world scale.

Apocalyptic AI?

It’s not just Elon Musk who is warning us about ‘summoning the demon’ with AI. Stephen Hawking and Bill Gates are telling us to be cautious too. We might empower computers to make high-quality decisions that are right from a machine’s perspective but wrong from a human one.

Remember the Midas story? The greedy king asks for a boon by which all that he touches turns to gold. The wish is granted without regard for the human cost (Bacchus, the Roman god who grants the boon, wants to teach Midas a lesson; when Midas accidentally turns his daughter into gold, Bacchus reverses it). Apply this situation to today’s world. A super-intelligent system that doesn’t possess the emotions we do, and that can thwart all our schemes to defeat it, is in fact a demon! Think Skynet gone live.

It is possible that AI research may get out of hand and create a self-evolving intelligent system that prioritizes its own survival over humans. While total human extermination may or may not happen, the encroachment of AI on predominantly human jobs is expected.

Already, self-driving cars are taking center stage, and they could put cab drivers out of work.

Alibaba’s Jack Ma believes that excessive application of AI will lead to widespread chaos as unemployment soars. He foresees a widening divide between the haves and have-nots, and geopolitical discord, as AI causes power to be consolidated in the hands of a few. He goes on to say that the rise of AI will lead to World War III. His reasoning is simple: “The first technology revolution caused World War I. The second technology revolution caused World War II. This (Artificial Intelligence) is the third technology revolution.”

Do any AI Regulations exist at the moment?

AI researchers are divided on the need for regulations. Some feel that regulations would prove detrimental to important technical advancements, as mentioned in this Stanford University report. They urge tough transparency requirements and meaningful enforcement, as opposed to narrow compliance that companies meet in letter but not in spirit. Others are working towards building base AI principles that would guide researchers towards safe and beneficial AI, and are backed by the likes of Elon Musk, Stephen Hawking, Google, Amazon and Microsoft, to name a few.

Partnership on AI

The big names of Silicon Valley have come together to form the Partnership on AI, which provides a platform for researchers, scientists, policymakers and the public to share knowledge. The group has thematic pillars that promote safe and accountable AI.

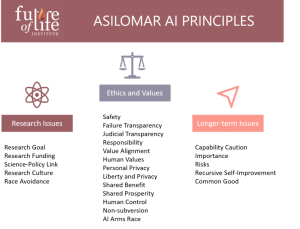

Asilomar AI Principles

In January 2017, leading AI researchers convened at the Beneficial AI 2017 conference in Asilomar, California. The group discussed the advancement of human-friendly AI and suggested regulatory principles.

European Union and US Government say yes to AI regulation

The European Union, too, published a document in 2016 with the intention of putting AI regulations in place by 2018. Similarly, the US Government has stressed the need for AI regulations.

The need for AI regulatory bodies

With businesses around the world investing heavily in AI without heeding precautions or repercussions, AI regulations are indeed the need of the hour. But excessive, misguided regulation will only stifle innovation. Thus, it is essential for lawmakers and researchers to work hand-in-hand to form an AI regulatory body that would:

- assess the goal of the AI project,

- understand its benefits/disadvantages,

- provide for any adverse effects and ensure public safety.

Having a law does not prevent cybercrimes, nor will it prevent antisocial elements from using AI for self-serving ends. Effective enforcement is crucial, and it is best if the regulatory body is formed as early as possible, because Artificial Super Intelligence is coming; we just don’t know when.